10 Best Free Online Robots.txt Generator Websites

Here is a list of the best free online Robots.txt generator websites. Search engines use web crawlers, also known as search robots, to read the content of web pages. If you have a website, its pages get crawled by these robots. A Robots.txt file lets you define the rules for web crawling on your website. This file contains directives that allow or restrict search robots from crawling the website. You can set a default permission for all search robots and individually allow or disallow popular ones. Similarly, you can define restricted directories or pages, such as the admin panel, that you do not want robots to crawl. Along with that, this file can also contain a crawl delay and the URL of the website's XML sitemap.
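
To make those directives concrete, here is a minimal Python sketch of what such a generator produces. The function name `build_robots_txt`, its parameters, and the example.com URLs are assumptions made for illustration; none of the tools below is known to work exactly this way.

```python
def build_robots_txt(default_allow=True, robot_rules=None,
                     restricted_dirs=(), crawl_delay=None, sitemap_url=None):
    """Return robots.txt text built from generator-style options (hypothetical helper)."""

    def group(agent, allowed):
        # One group per robot: a User-agent line followed by its rules.
        lines = [f"User-agent: {agent}"]
        if not allowed:
            lines.append("Disallow: /")      # refuse the whole site
        elif restricted_dirs:
            lines.extend(f"Disallow: {path}" for path in restricted_dirs)
        else:
            lines.append("Disallow:")        # empty value means nothing is blocked
        if crawl_delay is not None:
            lines.append(f"Crawl-delay: {crawl_delay}")
        return lines

    # The "*" group is the default permission for every robot not named below.
    groups = [group("*", default_allow)]
    for agent, allowed in (robot_rules or {}).items():
        groups.append(group(agent, allowed))

    text = "\n\n".join("\n".join(g) for g in groups) + "\n"
    if sitemap_url:
        text += f"\nSitemap: {sitemap_url}\n"
    return text


robots_txt = build_robots_txt(
    default_allow=True,
    robot_rules={"Googlebot-Image": False},   # refuse one robot individually
    restricted_dirs=["/admin/"],
    crawl_delay=10,
    sitemap_url="https://www.example.com/sitemap.xml",
)
print(robots_txt)
```

Keep in mind that not every crawler honors the Crawl-delay line, so treat it as a request rather than a guarantee.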

A Robots.txt file is placed in the root directory of a website. A search engine reads that file and then crawls or skips pages as described in it. Apart from crawling, a robots.txt file can also help a search engine to better index the website. Making a robots.txt file is quite easy. Here are 10 websites where you can do it online within a couple of minutes.
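
As a rough illustration of how a well-behaved crawler reads the file, the sketch below uses Python's standard `urllib.robotparser` module. The sample rules, the robot names ExampleBot and BadBot, and the example.com URLs are made up for this example.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt content (invented for this example).
SAMPLE = """\
User-agent: *
Disallow: /admin/
Crawl-delay: 10

User-agent: BadBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(SAMPLE.splitlines())

# ExampleBot has no group of its own, so the "*" group applies to it.
can_fetch_home = parser.can_fetch("ExampleBot", "https://www.example.com/")
can_fetch_admin = parser.can_fetch("ExampleBot", "https://www.example.com/admin/login")

# BadBot has its own group that refuses the whole site.
badbot_home = parser.can_fetch("BadBot", "https://www.example.com/")

# Crawl delay requested for ExampleBot, taken from the "*" group.
delay = parser.crawl_delay("ExampleBot")

print(can_fetch_home, can_fetch_admin, badbot_home, delay)
```

A real crawler would load the file from the site root with `set_url()` and `read()` instead of parsing a string.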

These 10 websites offer robots.txt file generator tools. All these tools cover popular search robots. You can set crawling permissions for those robots individually. Then you can set default permissions for all the other crawling robots that are not covered. Some of these tools let you restrict certain directories from all search robots, while others let you configure which directories or pages (URLs) you want to restrict from which search robot. You can go through the post to check these tools in detail. I hope this helps you find a tool to generate your customized robots.txt file.

My Favorite Online Robots.txt Generator

SEOptimer.com is my favorite Robots.txt generator on this list. This tool packs all the options you need to create a customized Robots.txt file for your websites. It includes crawl delay and restricted directories as well. And in the end, you can either copy the Robots.txt content directly or export it to save the file locally.

You can check out our other lists of the best free Online UUID Generator Tool Websites, Online DMARC Record Generator Websites, and Online DKIM Record Generator Websites.

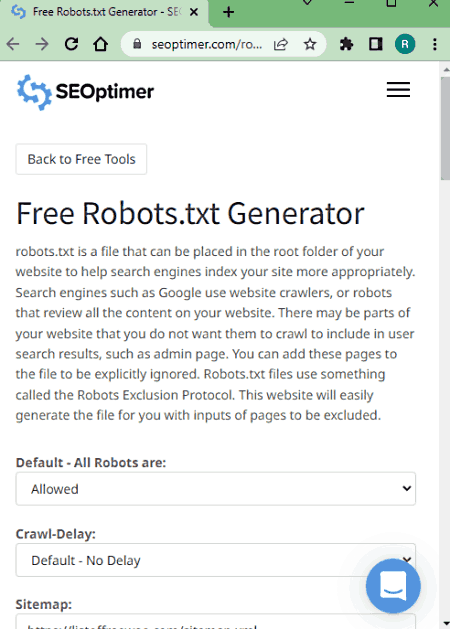

SEOptimer.com

SEOptimer offers a Free Robots.txt Generator. This tool covers 15 search robots, including Google, Google Image, Google Mobile, MSN, Yahoo, Yahoo MM, Asks, GigaBlast, Nutch, and more. You can start by setting the default status for the search robots. This way, you don’t have to set it individually for each search robot. Apart from that, you can add multiple directories that you want to restrict from crawling. With that, you can create the Robots.txt file. This tool has two options: Create and Create & Download. The Create option shows the content of the file on the screen, and you can copy it to the clipboard from there. The Create & Download option creates the file and downloads it to your computer.

How to generate Robots.txt online on SEOptimer?

- Go to this Free Robots.txt Generator using the link given below.

- Set the Default status to Allowed or Refused.

- Pick the Crawl-Delay and add the Sitemap URL (if available).

- Then set the status of search robots as per your needs.

- Define the Restricted Directories that you want to exclude from crawling.

- Then use the Create/Download button to generate and save the Robots.txt file.

Highlights:

- This tool covers 15 search robots that you can allow or refuse.

- It lets you add multiple restricted directories.

- Options to copy and download generated Robots.txt file.

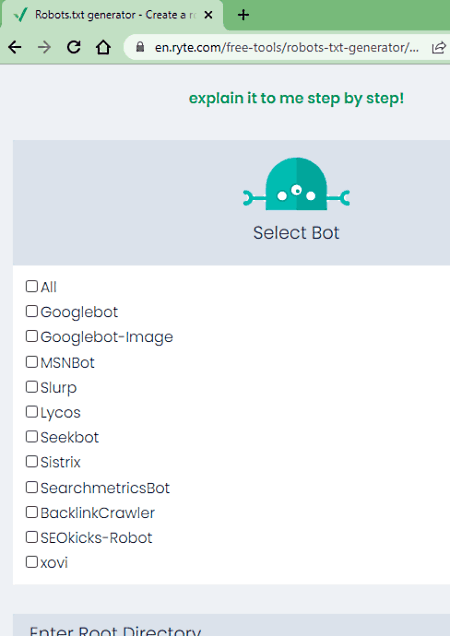

En.ryte.com

En.ryte.com has a free Robots.txt Generator tool. With this tool, you can quickly generate a Robots.txt file for your website. This tool starts with three options: Allow All, Disallow All, or Customize. The first two options simply generate a Robots.txt file where everything is allowed or disallowed, respectively. With the Customize option, you can select which search robots you want to allow or disallow on your website. Along with that, you also get options to add the URLs you want to allow and disallow for crawling. This way, you can quickly get a Robots.txt file for your website.

How to generate Robots.txt online on En.ryte.com?

- Follow the link given below to open this Robots.txt Generator.

- Pick a permission option to create your file. Go with Allow All, Disallow All, or Customize.

(For Allow All and Disallow All, you get the file right away; for Customize, follow along.)

- Set the status of search robots as per your requirements.

- Enter the Root Directory of the website along with the Allowed URL and Disallowed URL.

- Then check the checkbox and click the Create button to download the Robots.txt file.

Highlights:

- This tool covers 11 search robots that you can allow or disallow on your website.

- It lets you define the allowed URL and disallowed URL (restricted directories).

- No option to add crawl delay.

- Options to copy and download the generated Robots.txt file.
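
For reference, the Allow All and Disallow All presets boil down to very small files. The sketch below shows the conventional content for each and checks it with Python's standard `urllib.robotparser`. The constant names, the `is_allowed` helper, and the ExampleBot agent are assumptions for illustration, not output copied from this tool.

```python
from urllib.robotparser import RobotFileParser

# Conventional "allow everything" and "block everything" files.
ALLOW_ALL = "User-agent: *\nDisallow:\n"
DISALLOW_ALL = "User-agent: *\nDisallow: /\n"


def is_allowed(robots_txt, url, agent="ExampleBot"):
    """Return True if the given robots.txt text lets `agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


page = "https://www.example.com/blog/post"
print(is_allowed(ALLOW_ALL, page))      # allowed under the first preset
print(is_allowed(DISALLOW_ALL, page))   # refused under the second preset
```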

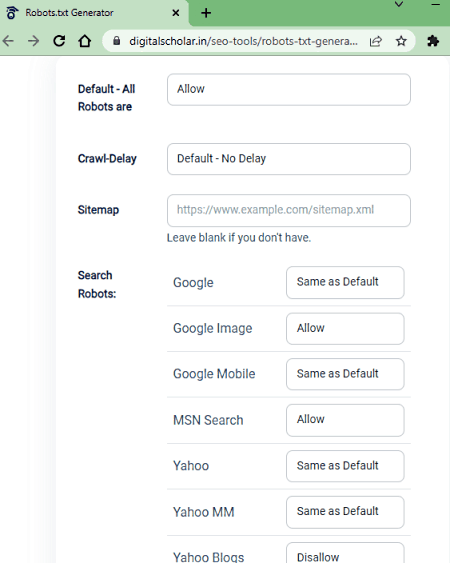

DigitalScholar.in

DigitalScholar offers a free online Robots.txt Generator tool. This tool also covers 15 popular search robots. You can either pick the status for each search robot separately. Or, you can set a default status and then change it only for the specific search robots as per your needs. If you want to add a crawl delay to your website then you can do that. Apart from that, you can mention the folders that you do not want to get crawled by the search robots and create the robots.txt file. This tool shows the content of the file on the screen with a copy button next to it. It also offers an option to export the file locally.

How to generate Robots.txt online on DigitalScholar?

- Open this Robots.txt Generator using the link given below.

- Set the Default status to Allowed or Disallow.

- Pick the Crawl-Delay and add a Sitemap URL.

- Then set the status of search robots as per your needs.

- Add the Disallow Folders that you want to exclude from crawling.

- Then click the Generate button to get the Robots.txt file.

Highlights:

- This tool covers 15 search robots that you can allow or disallow.

- It lets you add multiple folders to remove from crawling.

- Options to copy and export generated Robots.txt file.

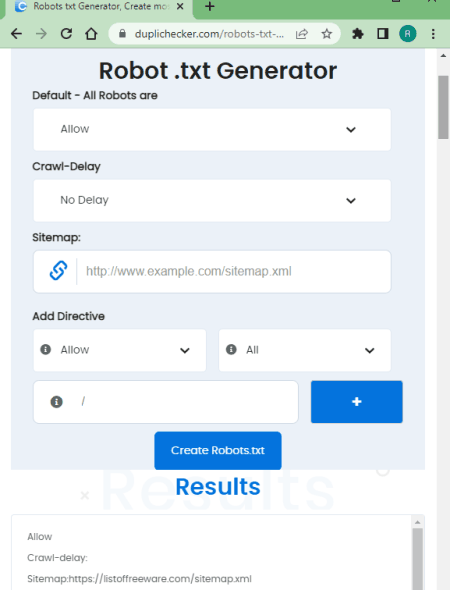

DupliChecker.com

DupliChecker is another free website where you can create a Robots.txt file. It starts by asking for the default permission for all the search robots. You can set that permission as per your needs. Along with that, you get options to configure crawl delay and add a sitemap. After these basic requirements, it lets you add directives. This feature lets you set permissions individually for each search robot. It covers 25 search robots. You can pick a robot, define the permissions, and add a directory accordingly. This way, you can easily create a Robots.txt file on this website.

How to generate Robots.txt online on DupliChecker?

- Use the link given below to access this tool in your browser.

- Set the default status for all search robots and pick a crawl delay if needed.

- Then using the Add Directive option, set permission, and select the search robot from the list.

- Add a restricted directory if you want.

- Similarly, follow the above 2 steps to add more custom permissions.

- In the end, click the Create Robots.txt button to get the file.

Highlights:

- This tool covers 25 search robots that you can allow or disallow on your website.

- Option to add directives with permission and directory.

- Options to copy and download the generated Robots.txt file.
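
A directive in this sense is a combination of a permission, a robot, and a directory. The Python sketch below shows one way such directives could be grouped into robots.txt text. The tuple layout and the `render_directives` name are hypothetical and do not reflect how this tool is implemented.

```python
from collections import defaultdict


def render_directives(directives):
    """Group (permission, robot, path) tuples into robots.txt groups."""
    grouped = defaultdict(list)
    for permission, robot, path in directives:
        if permission not in ("Allow", "Disallow"):
            raise ValueError(f"unknown permission: {permission}")
        grouped[robot].append(f"{permission}: {path}")

    # Each robot gets one group: a User-agent line followed by its rules.
    blocks = ["\n".join([f"User-agent: {robot}", *rules])
              for robot, rules in grouped.items()]
    return "\n\n".join(blocks) + "\n"


directives = [
    ("Disallow", "*", "/private/"),
    ("Disallow", "Googlebot", "/drafts/"),
    ("Allow", "Googlebot", "/drafts/public/"),
]
output = render_directives(directives)
print(output)
```

Grouping by robot keeps all rules for one crawler under a single User-agent line, which matters because a crawler typically follows only the group that matches it most specifically.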

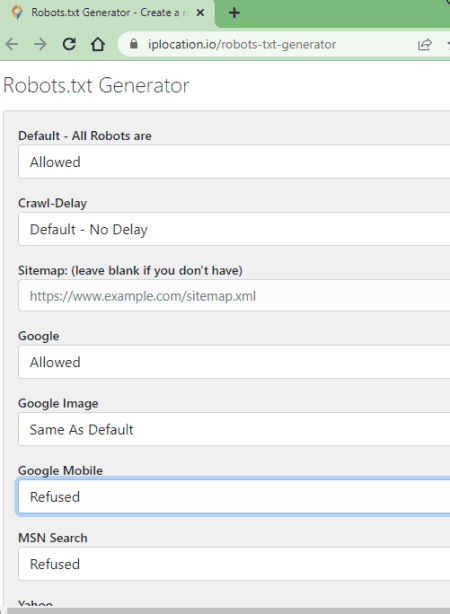

IPLocation.io

IPLocation is another free website where you can generate a Robots.txt file. It has a simple tool where you can add your preferences and generate the file. You can set a default status for search robots and mark those that you don’t want to crawl your website. You can also add a crawl delay if you want and define the directories that you want to restrict from crawling. With that, you can generate the Robots.txt file. You can either copy the file content directly or export it to save the file locally.

How to generate Robots.txt online on IPLocation?

- Follow the link given below to open this Robots.txt Generator tool on IPLocation.

- Set the Default status to Allowed or Refused.

- Pick the Crawl-Delay and add the Sitemap URL.

- Then set the status of search robots as per your needs.

- Define the Restricted Directories that you want to exclude from crawling.

- Then click the GENERATE NOW button to get the Robots.txt file.

Highlights:

- This tool covers 15 search robots that you can allow or refuse.

- It lets you add crawl delay and multiple restricted directories.

- Options to copy and export the generated Robots.txt file.

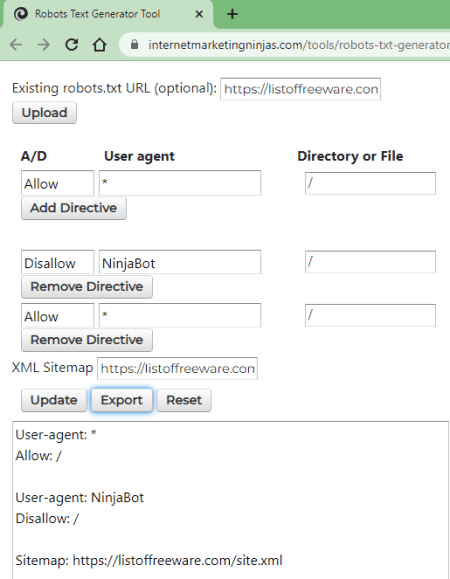

InternetMarketingNinjas.com

InternetMarketingNinjas offers a free Robots.txt generator tool. This tool lets you generate a robots.txt file for your website. If you already have a robots.txt file, you can load that file and make changes to it. The process is simple. It lists 20+ search robots. You can pick a robot and set the permission along with the directory. This way, you can add the desired robots to your file. And if you want to remove a robot that is present in the file, you can do that as well using the “Remove Directive” option. Just like that, you can create or update your robots.txt file.

How to generate Robots.txt online on InternetMarketingNinjas?

- Follow the link given below to access this tool in your browser.

- Upload or fetch your robots.txt file if you want to update that.

- Set the permission and pick a search robot from the list. Add directory if needed.

- Then click the Add Directive button and follow the same to add more robots.

- In the end, click the Create Robots.txt button to get the file.

Highlights:

- This tool can update existing Robots.txt or create a fresh file.

- It covers 20+ search robots that you can allow or refuse.

- Option to add directives with permission and directory.

- No option to configure crawl delay.

- Options to copy and save generated Robots.txt file.
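
Updating an existing file by removing a robot can be pictured with the short Python sketch below. It assumes each group starts with a single User-agent line and that groups are separated by blank lines, which is not true of every robots.txt file. The `remove_robot` helper is hypothetical and is not the tool's own code.

```python
def remove_robot(robots_txt, robot):
    """Drop the group whose User-agent line names `robot` (simplified sketch)."""
    kept = []
    for block in robots_txt.strip().split("\n\n"):
        lines = block.splitlines()
        if not lines:
            continue
        # Inspect only the first line of each blank-line-separated group.
        field, _, value = lines[0].partition(":")
        is_target = (field.strip().lower() == "user-agent"
                     and value.strip().lower() == robot.lower())
        if not is_target:
            kept.append(block)
    return "\n\n".join(kept) + "\n"


existing = "User-agent: *\nDisallow: /admin/\n\nUser-agent: BadBot\nDisallow: /\n"
updated = remove_robot(existing, "BadBot")
print(updated)
```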

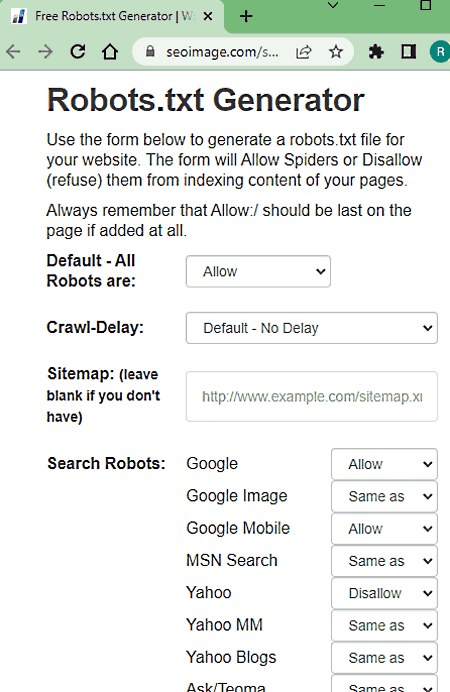

SEOImage.com

SEOImage offers a free tool to generate a Robots.txt file online. This tool is quite simple and covers 15 popular search robots. You can set which search robots you want to allow and disallow on your website. You can also set a default permission for the search robots. If you don’t want a search robot to crawl specific files of your website, you can add their directory to the restricted directories. With that, you can generate the Robots.txt file for your website.

How to generate Robots.txt online on SEOImage?

- Follow the link given below to open this Free Robots.txt Generator tool.

- Set the Default status to Allowed or Disallow.

- Pick the Crawl-Delay and add the Sitemap URL (if available).

- Then set the status of search robots as per your needs.

- Define the Restricted Directories that you want to exclude from crawling.

- Then use the Create/Save button to generate and save the Robots.txt file.

Highlights:

- This tool covers 15 search robots that you can allow or refuse.

- It lets you add multiple restricted directories.

- Options to copy and download generated Robots.txt file.

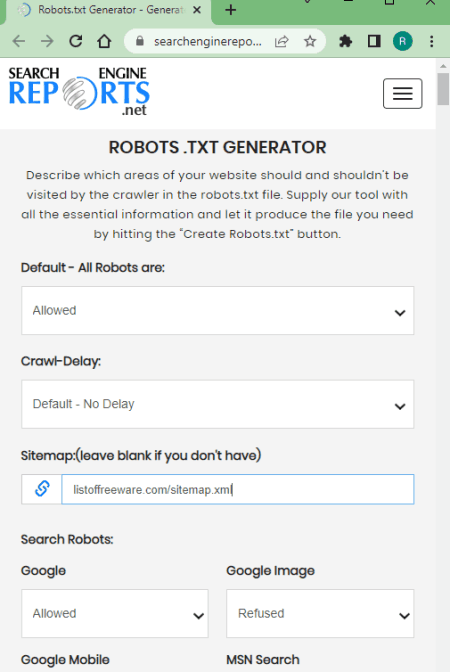

SearchEngineReports.net

SearchEngineReports offers a free online Robots.txt Generator. You can use this tool to make a Robots.txt file for your website. It lets you pick a default permission for search robots crawling your website. Then you can allow or refuse popular search robots as per your needs. Apart from that, you can add a crawl delay and mark the directories of the website that you do not want any search robot to crawl. This tool generates the Robots.txt data and shows it on the screen. From there, you can copy the data to the clipboard or export it to a TXT file.

How to generate Robots.txt online on SearchEngineReports?

- Go to this Robots.txt Generator using the link given below.

- Set the Default status to Allowed or Refused.

- Pick the Crawl-Delay and add the Sitemap URL (optional).

- Then set the status of search robots as per your requirements.

- Add the Restricted Directories that you want to exclude from crawling.

- Then use the Create Robots.txt button to generate and save the Robots.txt file.

Highlights:

- This tool covers 15 search robots that you can allow or refuse.

- It lets you add crawl delay and multiple restricted directories.

- Options to copy and download generated Robots.txt file.

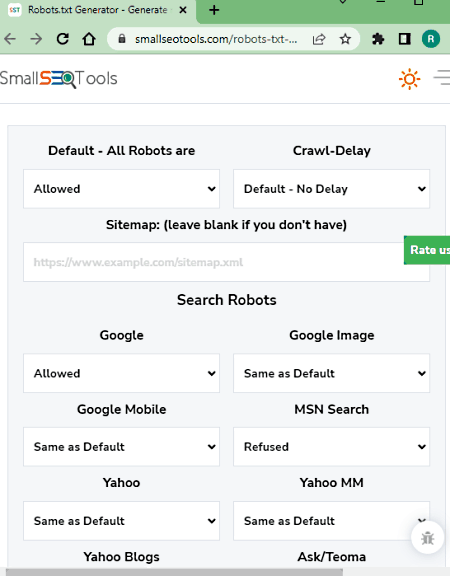

SmallSEOTools.com

SmallSEOTools is another free website to generate Robots.txt online. The process of generation is quite simple and similar to other such tools. You can define if you want the search robots to crawl your websites or not. You can set default permissions or pick different permissions for different search robots. If you want to exempt something from crawling then you can add that under the restricted directory. This simple tool generates the Robots.txt file with permissions. You can copy it to the clipboard or export it to a TXT file and save it on your computer.

How to generate Robots.txt online on SmallSEOTools?

- Use the link given below to open this Robots.txt Generator.

- Start with setting the Default status to Allowed or Refused.

- Then pick the Crawl-Delay and add the Sitemap URL (optional).

- After that, set the status of search robots as per your requirements.

- Add the Restricted Directories that you want to exclude from crawling.

- Then use the Create Robots.txt button to generate and save the Robots.txt file.

Highlights:

- This tool covers 15 search robots that you can allow or refuse.

- It lets you add crawl delay and multiple restricted directories.

- Options to copy and export the generated Robots.txt file.

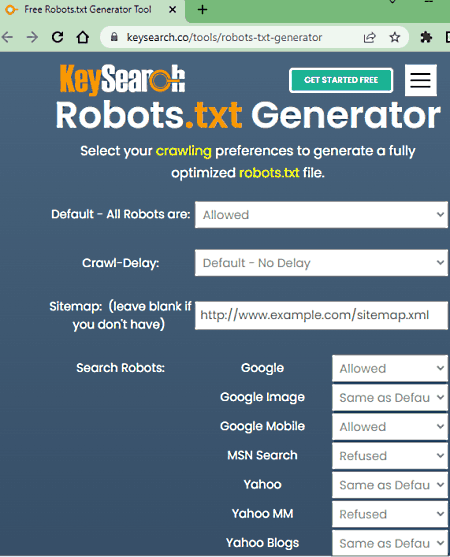

KeySearch.co

KeySearch is yet another website with an online Robots.txt generator. This tool lets you generate a Robots.txt file to allow or block search robots from crawling your website. It lets you set a default permission for all the robots. On top of that, you can individually set permissions for 15 popular search robots. If you want to exclude any page of your website from crawling, you can add the directory of that page under restricted directories. This way, you can generate the robots.txt file with the desired configuration.

How to generate Robots.txt online on KeySearch?

- Open this Robots.txt Generator using the link given below.

- Set the Default status for all robots.

- Configure the Crawl-Delay and add the Sitemap URL (if available).

- Then set the status of search robots as per your needs.

- Define the Restricted Directories that you want to exclude from crawling.

- Then use the Create/Download button to generate and save the Robots.txt file.

Highlights:

- This tool covers 15 search robots that you can allow or refuse.

- It lets you add multiple restricted directories.

- Options to copy and save generated Robots.txt file.
