20 Best Free Web Scraper Software For Windows

Here is a list of the best free web scraper software for Windows. These tools are very useful when you have to work with large amounts of data. Some of them are quite basic and require no extra knowledge on your part, while others require knowledge of regular expressions and of other areas, such as JavaScript. The data that is useful to you is neatly sorted out. Some of these tools also let you automate the task so that the data is retrieved with the click of a button, and you can specify a time interval after which the data is updated automatically.

Best Free Web Scraper Software:

The web scraper software that I like the most is Web Scraper, because it is simple, lightweight, and easy to use. You can use it to scrape different types of data from the web, such as links, text, tables, and many other elements. It also lets you add multiple URLs and generates a selector graph. The scraped data can be exported in CSV format, and you can choose to store the data in local storage or CouchDB.

You can also check out the lists of RSS Reader Software, Website Downloader Software, and Broken Link Checker Software.

Web Scraper

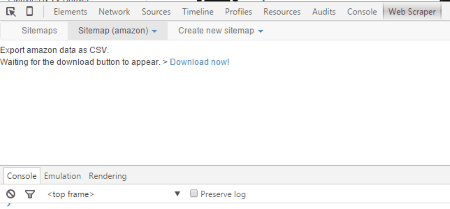

Web Scraper is a Chrome extension for scraping data from websites. It can extract many different types of data, such as text, links, popup links, images, tables, element attributes, HTML, and elements. To use Web Scraper, follow these steps:

- Install the Chrome Extension.

- Open the developer tools by pressing F12 or Ctrl+Shift+I.

- Go to the Web Scraper tab.

- Click Create new sitemap and enter the sitemap name and start URL.

- Multiple URLs can be added here by clicking the + button.

- To work with an existing sitemap, enter the sitemap JSON and sitemap name, and then import the sitemap.

- After creating the sitemap, choose the option to add a new selector.

- Here you enter the selector ID, type, selector, regex, delay, and parent selector.

- The selector type can be text, link, popup link, image, table, element attribute, HTML, element, element scroll down, element click, or grouped.

- Based on the selectors in the sitemap, a selector graph can be generated.

- Click the Scrape button to scrape the data.

- Then click the Export data as CSV button to download the scraped data in CSV format.

- You can also export the sitemap, edit its metadata, and preview, edit, or delete selectors.

In the storage settings, you can choose either local storage or CouchDB. If you choose CouchDB, you have to enter the Sitemap db and Data db.
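Web Scraper is configured entirely through its own UI, so no code is needed to use it. Still, the idea behind a text selector with an optional regex can be sketched in plain Python. The following is a minimal, standard-library-only illustration, not the extension's implementation: it matches elements by tag name and a single class (a much simpler stand-in for a CSS selector), it assumes the regex keeps only the first matching part of each text, and the class, function, and sample HTML are hypothetical.

```python
import re
from html.parser import HTMLParser


class TextSelector(HTMLParser):
    """Collects the text of each <tag class="cls"> element (nested matches not supported)."""

    def __init__(self, tag, cls):
        super().__init__()
        self.tag, self.cls = tag, cls
        self.inside = False
        self.results = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == self.tag and self.cls in classes:
            self.inside = True
            self.results.append("")

    def handle_endtag(self, tag):
        if tag == self.tag:
            self.inside = False

    def handle_data(self, data):
        if self.inside:
            self.results[-1] += data


def select_text(html, tag, cls, regex=None):
    """Return the text of matching elements, optionally trimmed by a regex."""
    parser = TextSelector(tag, cls)
    parser.feed(html)
    texts = [t.strip() for t in parser.results]
    if regex:
        # Keep only the part of each text that the regex matches (first match).
        texts = [m.group(0) for t in texts if (m := re.search(regex, t))]
    return texts


# Hypothetical page fragment.
html = """
<div class="product"><span class="price">Price: $19.99</span></div>
<div class="product"><span class="price">Price: $5.00</span></div>
"""
print(select_text(html, "span", "price"))                     # ['Price: $19.99', 'Price: $5.00']
print(select_text(html, "span", "price", regex=r"\d+\.\d+"))  # ['19.99', '5.00']
```

Elements whose text does not match the regex are dropped in this sketch; the extension may treat them differently.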

Data Scraper

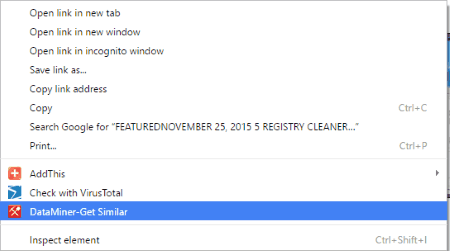

Data Scraper is another Chrome extension for scraping data, and it can also be used as data mining software. To use it, follow these steps:

- Install the Chrome extension. This adds a context menu to Chrome.

- Go to the website from which you want to get the data.

- Select a sample of the content, right-click, and select DataMiner-Get Similar from the context menu.

- The scraper window then opens and shows all the similar data from the table, along with the recipes, community recipes, containers, columns, next page navigation, and filters.

- You can add or remove columns.

- The scraped data can then be either copied to the clipboard or downloaded as CSV.

Under the next page navigation tab, you can choose to navigate paginated pages automatically. Here you set the XPath of the next page element, choose whether to follow its URL or click it, and set the auto advance wait time. You can also choose to start auto advance. To use the data miner on some websites, such as Google, eBay, and LinkedIn, you need to log in first.
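The next page navigation setting boils down to a loop: extract the rows on the current page, locate the next-page element by XPath, follow its link, wait, and repeat. Below is a rough Python sketch of that loop under stated assumptions: the pages are hypothetical, well-formed markup held in a dictionary instead of being downloaded, the XPath expressions use the limited subset that `xml.etree.ElementTree` supports, and the function names are illustrative. It is not how Data Scraper works internally.

```python
import time
import xml.etree.ElementTree as ET

# Hypothetical, well-formed pages standing in for real HTTP responses.
PAGES = {
    "/list?page=1": "<html><body><ul><li class='item'>A</li><li class='item'>B</li></ul>"
                    "<a class='next' href='/list?page=2'>Next</a></body></html>",
    "/list?page=2": "<html><body><ul><li class='item'>C</li></ul></body></html>",
}

ITEM_XPATH = ".//li[@class='item']"  # rows to extract on each page
NEXT_XPATH = ".//a[@class='next']"   # the "next page element"


def fetch(url):
    # Stand-in for an HTTP request; a real scraper would download the page here.
    return ET.fromstring(PAGES[url])


def scrape_all(start_url, wait_seconds=0.0, max_pages=50):
    rows, url, visited = [], start_url, set()
    while url and url not in visited and len(visited) < max_pages:
        visited.add(url)
        page = fetch(url)
        rows.extend(li.text for li in page.findall(ITEM_XPATH))
        next_link = page.find(NEXT_XPATH)
        url = next_link.get("href") if next_link is not None else None
        if url:
            time.sleep(wait_seconds)  # plays the role of the auto advance wait time
    return rows


print(scrape_all("/list?page=1", wait_seconds=0.5))  # ['A', 'B', 'C']
```

The `visited` set and the `max_pages` cap stop the loop if a site's "next" link ever points back to an earlier page.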

OutWit Hub Light

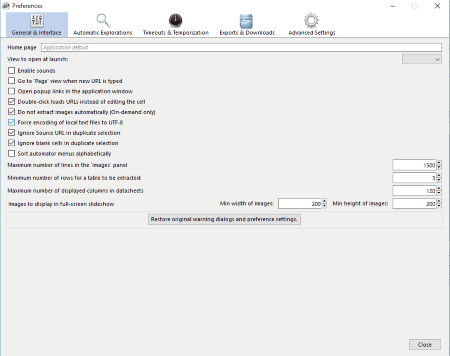

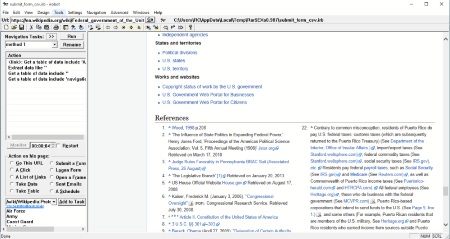

OutWit Hub Light is very simple software for extracting data from websites.

- Simply enter the URL from which you want the data to be retrieved.

- The webpage is then displayed.

- Choose the type of data that you want to extract, such as links, documents, images, contacts, data, tables, lists, text, words, news, source, automators, and many more.

- The data is extracted based on the type that you choose.

- The extracted data is displayed.

- You can export the data and choose which columns to import and export.

- You can set options to select only the rows with specific attributes, and you can also set limits.

- The catch options can be set to catch on demand, auto catch, empty on demand, and auto empty.

- You can also view the source file and export the catch as Excel, JSON, CSV, SQL, XML, HTML, and many more formats.

- You can also use the fast scrape feature.
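Exporting a catch with only some of its columns is essentially a projection of the rows onto the chosen fields, followed by serialization. As a hedged sketch of that step (not OutWit Hub's code), the Python below writes hypothetical scraped rows as CSV or JSON using only the standard library; `catch`, `export_csv`, and `export_json` are illustrative names.

```python
import csv
import io
import json

# Hypothetical scraped rows standing in for OutWit Hub's "catch".
catch = [
    {"name": "Example Store", "phone": "555-0100", "city": "Springfield"},
    {"name": "Sample Cafe", "phone": "555-0199", "city": "Shelbyville"},
]


def export_csv(rows, columns=None):
    """Write rows as CSV, keeping only the chosen columns (all by default)."""
    columns = columns or list(rows[0])
    buf = io.StringIO()
    # extrasaction="ignore" silently drops the columns that were not chosen.
    writer = csv.DictWriter(buf, fieldnames=columns, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def export_json(rows, columns=None):
    """Write rows as a JSON array, keeping only the chosen columns."""
    columns = columns or list(rows[0])
    return json.dumps([{c: row[c] for c in columns} for row in rows], indent=2)


print(export_csv(catch, columns=["name", "phone"]))
print(export_json(catch, columns=["name"]))
```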

Visual Scraper

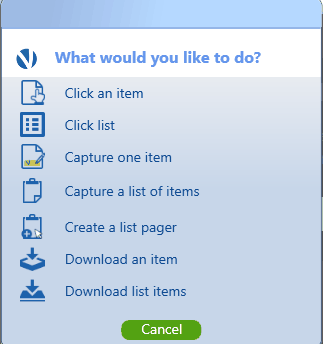

VISUALSCRAPER is simple web data extraction software for extracting different types of data. It is quite easy to use; just follow these steps:

- Register on the website and download the software.

- After installation, log in to the software.

- Enter the link from which you want to extract the data.

- Then click the items and areas you want, select the type of data to extract, classify it into different data types, and you are good to go.

- You can also use it to capture text or images, set user input, capture lists of items, and perform many other tasks.

- You can also choose to capture default values, capture screenshots, clear browser cookies, load a new page, refresh the page, and set scroll actions.

- In the settings tab, you can also choose to log in automatically, disable image downloads, disable browser zoom, and set many other options.

IRobot

IRobot is good software for screen scraping. To scrape a website with it, follow these steps:

- Enter the URL from which you want to download the data.

- Select the option to run a navigation task, and create your own list of navigation tasks if required.

- Different actions can be performed on the page, such as extracting a list of links, taking data, taking a table, submitting a form, filling in a logon form, opening a frame, sending emails, and making a schedule.

- The generated data can be copied to the clipboard or downloaded as CSV.

- You can also generate robots to automate your tasks.

- A log file is also generated so that you can monitor your task at any moment.

- You can also set the navigation speed, pop-up handling, silent browsing, and many other options.

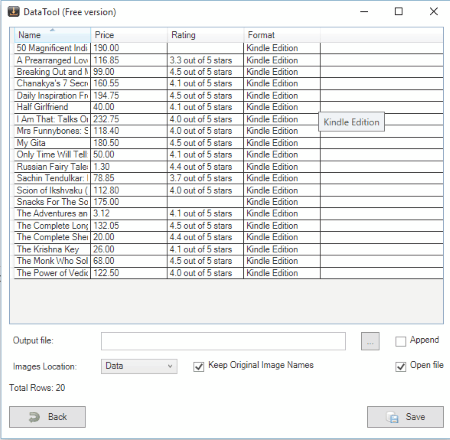

DataTool

DataTool is simple, easy-to-use free web scraping software.

- Just install the software and then browse to the website from which you want to scrape the data.

- Click the DataTool button to open a window.

- Here you can add settings for browsing the content.

- In the Actions tab, click the “new action” button.

- Set the selection mode, which can be add content mode, set next element mode, or off.

- Select the elements containing the data that you want to download, and enter their details.

- Then click Get Data.

- The data is then collected and displayed as a table.

- You can also set different options here, such as the output file name, image location, total row count, the option to amend, and whether to keep the original image names.

- The data can be saved in different file formats, such as CSV Unicode, Excel, SQL script, SQL, XML, and HTML.

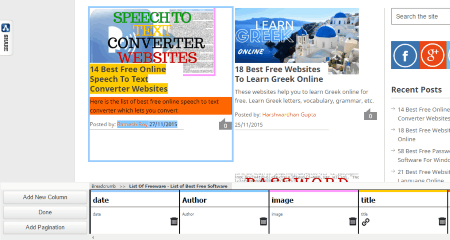

GetData.IO

GetData.IO is a simple Chrome extension for scraping data from websites.

- You have to register on the website first.

- After you install the extension, browse to the website and click the GetData.IO button.

- An option to add columns is then provided at the bottom.

- Name each column, and choose its details by clicking the appropriate data type.

- Click the Done button, after which you are taken to the website where the list is generated.

- Here you can view the crawler setup detail, crawler definition, crawler data preview, and crawler preview log.

- You can also pause the crawler at any time.

- Click the Save button to download the data file as CSV, JSON, or HTML.

- You can also view the linked data sets.

- The data results can be further edited or deleted as required.

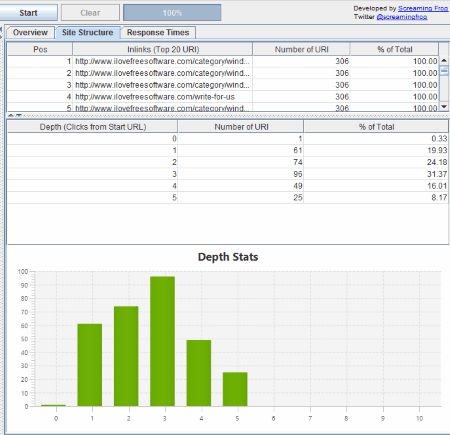

Screaming Frog SEO Spider

Screaming Frog SEO Spider is simple software for scraping data from websites, primarily for SEO purposes. The free version is limited to 500 URLs.

- To use it, simply enter the website URL, and the results are displayed.

- The data is automatically collected and classified under different tabs, such as links, H1, H2, meta keywords length, path, address, content, and so on.

- You can choose either the tree view or the list view.

- You can also view the different types of data available by choosing the tabs Internal, External, Protocol, Response Codes, URI, Page Titles, Meta Description, Meta Keywords, H1, H2, Images, Directives, AJAX, Custom, Analytics, and Search Console.

- Other information, such as response times, site structure, and an overview, is also displayed.

- You can also view the URL info, inlinks, outlinks, image info, and SERP snippet.

- You can also export the sitemap as CSV, XLS, or XLSX.
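Several of the tabs above (page titles, meta description, H1, H2) come from a handful of tags on each page. A minimal Python sketch of collecting those fields from a single page with the standard `html.parser` module follows; the `SeoAudit` class and the sample page are hypothetical, and a real crawler such as SEO Spider also follows links, records response codes, and much more.

```python
from html.parser import HTMLParser


class SeoAudit(HTMLParser):
    """Collects a page's <title>, meta description, and H1/H2 headings."""

    def __init__(self):
        super().__init__()
        self.fields = {"title": [], "meta description": [], "h1": [], "h2": []}
        self.current = None  # field whose text is currently being collected

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("title", "h1", "h2"):
            self.current = tag
            self.fields[tag].append("")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.fields["meta description"].append(attrs.get("content") or "")

    def handle_endtag(self, tag):
        if tag == self.current:
            self.current = None

    def handle_data(self, data):
        if self.current:
            self.fields[self.current][-1] += data


# Hypothetical page.
page = """<html><head><title>Free Tools</title>
<meta name="description" content="A list of free tools.">
</head><body><h1>Tools</h1><h2>Scrapers</h2><h2>Readers</h2></body></html>"""

audit = SeoAudit()
audit.feed(page)
print(audit.fields)
```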

OpenOffice Calc

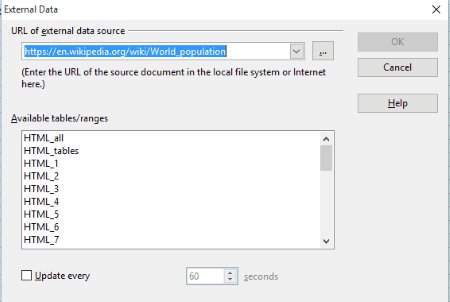

OpenOffice Calc is a popular spreadsheet application that can also be used to scrape data from the web. It is quite simple and easy to use. To use this feature, follow these steps:

- Open OpenOffice Calc, go to the Insert menu, and select “Link to External Data”.

- Enter the URL of the external data source and press Enter.

- The available tables/data ranges are then displayed.

- Select the table that you want to import.

- If required, choose the auto update option to keep the data updated from the online source.

- You can also set the update interval.

- You can then add your own data and use the other options available.

- The data can then be exported in various popular formats, such as PDF, HTML, ODS, XML, CSV, XLS, and many more.
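When Calc lists the tables available at a URL, it has parsed the page's HTML `<table>` elements. The sketch below shows roughly what that parsing involves, using Python's `html.parser`; it is not Calc's import filter, it assumes well-formed tables with explicit closing tags and no nested tables, and the names `TableExtractor` and `extract_tables` are hypothetical.

```python
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Turns every <table> on a page into a list of rows (lists of cell strings)."""

    def __init__(self):
        super().__init__()
        self.tables = []
        self.row = None   # cells of the row being read
        self.cell = None  # text of the cell being read

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self.row = []
        elif tag in ("td", "th") and self.row is not None:
            self.cell = ""

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self.cell is not None:
            self.row.append(self.cell.strip())
            self.cell = None
        elif tag == "tr" and self.row is not None:
            self.tables[-1].append(self.row)
            self.row = None

    def handle_data(self, data):
        if self.cell is not None:
            self.cell += data


def extract_tables(html):
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    return parser.tables


# Hypothetical page with two tables.
html = ("<table><tr><th>City</th><th>Temp</th></tr><tr><td>Oslo</td><td>4</td></tr></table>"
        "<p>Some text</p><table><tr><td>only</td></tr></table>")
tables = extract_tables(html)
print(len(tables), "tables found")  # 2 tables found
print(tables[0])                    # [['City', 'Temp'], ['Oslo', '4']]
```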

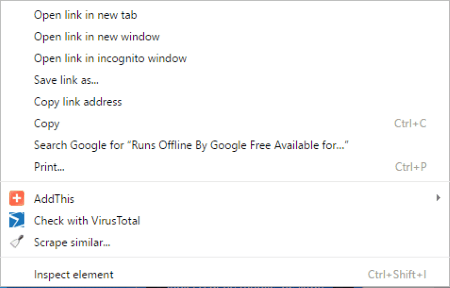

Scraper

Scraper is a simple tool for scraping data from websites. To use it, follow the steps below:

- Install the Chrome extension. This adds a context menu to Chrome.

- Browse to the website from which you want to get the data.

- Select a sample of the content, right-click, and select Scrape similar from the context menu.

- The scraper window then opens and shows all the similar data from the table, along with the selectors, columns, and filters.

- You can add or remove columns if you want.

- You can also save presets for future use.

- The scraped data can then be either copied to the clipboard or exported to Google Docs.
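Scrape similar works by generalizing the sample you selected into a selector that also matches its siblings. The toy Python version below illustrates one simple way to do that: it finds the sample element by its text and builds an XPath from its tag and `class` attribute. This is an assumption-laden sketch, not the extension's algorithm; it needs well-formed markup for `xml.etree.ElementTree`, and the function name and page fragment are hypothetical.

```python
import xml.etree.ElementTree as ET


def scrape_similar(root, sample_text):
    """Generalize one sample element into an XPath and return all matching texts."""
    sample = next(
        (el for el in root.iter() if (el.text or "").strip() == sample_text), None
    )
    if sample is None:
        raise ValueError(f"no element with text {sample_text!r}")
    # Generalize: same tag, and the same class attribute if the sample has one.
    xpath = f".//{sample.tag}"
    cls = sample.get("class")
    if cls:
        xpath += f"[@class='{cls}']"
    return xpath, [(el.text or "").strip() for el in root.findall(xpath)]


# Hypothetical, well-formed page fragment.
page = ET.fromstring(
    "<ul><li class='result'>Alpha</li><li class='result'>Beta</li>"
    "<li class='ad'>Sponsored</li></ul>"
)
print(scrape_similar(page, "Alpha"))  # (".//li[@class='result']", ['Alpha', 'Beta'])
```

Including the class in the XPath is what keeps the sponsored item out of the results; a real tool weighs many more signals.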

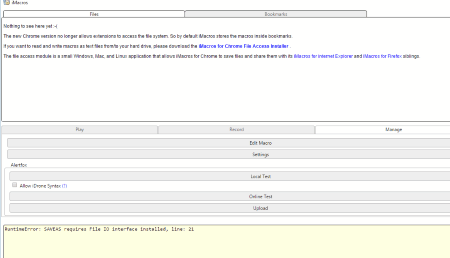

iMacros

iMacros is very popular web scraping software. It was originally available as an extension for Firefox, but is now available for Chrome and IE as well. It is a very simple extension that lets you “teach” it what to scrape and how to scrape it. Essentially, you navigate to a page and perform the actions that you need, and the extension records them. It can then repeat all those actions at the click of a button. To make full use of it, you can view the recorded script and edit it as required. For example, you can add variables to the script that prompt you to enter values when you run it. The extension is so popular that you can easily find scripts on the web for common tasks.

WebHarvest

Web-Harvest is a good Java application for extracting data from websites. To use it, follow these steps:

- Create a new config file, enter the code that you want, and save the configuration file.

- Run the file, and the data is retrieved based on the code that you entered.

- Depending on the type of data, you can view it in different formats, such as text, image, XML, HTML, and many more.

- You can also modify the extraction settings, or use one of the predefined configuration files and run it.

- You can also change the settings to show the hierarchy panel, log panel, and line numbers, and to dynamically locate processors in real time.

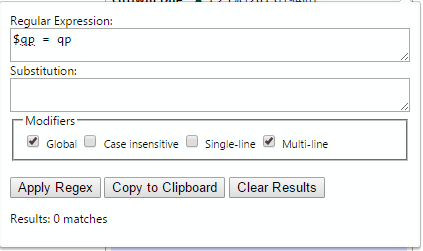

Regex Scraper

Regex Scraper is a simple Chrome extension that uses regular expressions to extract data from websites. To use it, you need a basic understanding of regular expressions.

- Install the Chrome extension and browse to the website from which you want to extract the data.

- Then click the Regex Scraper button.

- Enter the regular expression that you want to run.

- If required, you can also use substitution.

- You can also apply different modifiers, such as Global, Case sensitive, Single line, and Multi line.

- Then click the Apply Regex button.

- Once the results are displayed, you can copy them to the clipboard or clear the regex.
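The modifiers listed above usually correspond to standard regex flags: Global returns every match rather than only the first, Single line lets `.` match newlines, and Multi line makes `^` and `$` match at each line. Assuming Regex Scraper follows these usual meanings, the Python sketch below reproduces the options with the `re` module; `regex_scrape` and the sample text are hypothetical.

```python
import re


def regex_scrape(text, pattern, *, global_=True, case_sensitive=True,
                 single_line=False, multi_line=False, substitution=None):
    flags = 0
    if not case_sensitive:
        flags |= re.IGNORECASE
    if single_line:
        flags |= re.DOTALL     # "." also matches newlines
    if multi_line:
        flags |= re.MULTILINE  # ^ and $ match at the start/end of each line
    regex = re.compile(pattern, flags)
    if substitution is not None:
        # Global replaces every match; otherwise only the first one.
        return regex.sub(substitution, text, count=0 if global_ else 1)
    if global_:
        return [m.group(0) for m in regex.finditer(text)]
    m = regex.search(text)
    return [m.group(0)] if m else []


# Hypothetical page text.
page = "Contact: Sales@Example.com\nSupport: help@example.org"
print(regex_scrape(page, r"[\w.]+@[\w.]+"))  # ['Sales@Example.com', 'help@example.org']
print(regex_scrape(page, r"^support: .*$", case_sensitive=False, multi_line=True))
print(regex_scrape(page, "@", substitution=" [at] "))
```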

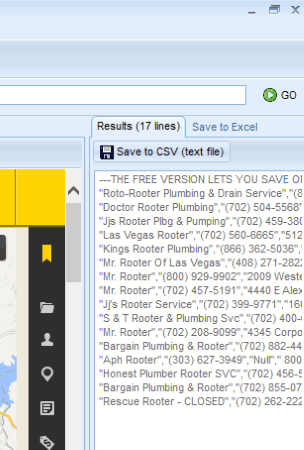

YellowPageRobot(YPR)

YellowPageRobot (YPR) is simple software that helps you extract data from Yellow Pages and from other websites as well.

- When you open the software, it automatically opens the Yellow Pages website.

- You can also add your own website link by providing the URL.

- Browse to the webpage from which you want to extract the data.

- Then click the Capture button to capture the data from the webpage.

- The captured data can then be saved as CSV or Excel.

- You can also choose not to load pictures by clicking the No Pix button.

- In the free version, you can capture data from a single page only.

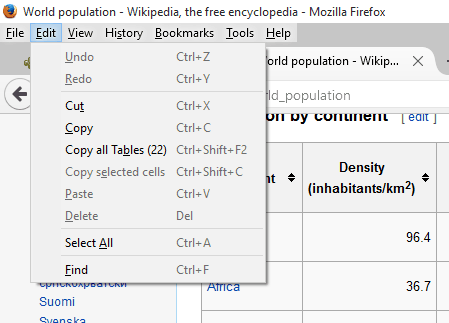

Dafizilla Table2Clipboard

Dafizilla Table2Clipboard is a simple Firefox extension that helps you extract tables from a webpage. It adds options to the Edit menu and to the browser context menu.

- Browse to the website and open the Edit menu.

- Here you are provided with the option to copy all tables.

- By holding Ctrl and clicking with the mouse, you can select individual cells or groups of cells.

- After you click on a table, the browser context menu also offers options to copy the whole table, select a row, select a column, and select the table.

- The formatting is preserved when the data is copied.
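Copying a whole table, a row, or a column typically places tab-separated text on the clipboard, which spreadsheets split back into cells on paste. A small Python sketch of building that text follows; it is an illustration under that assumption, not Table2Clipboard's code, it produces only the plain-text form (not the formatting the extension preserves), and `select_row`, `select_column`, and `to_tsv` are hypothetical names.

```python
import csv
import io


def select_row(table, index):
    """Return a one-row table containing row `index`."""
    return [table[index]]


def select_column(table, index):
    """Return a one-column table containing column `index` (short rows are skipped)."""
    return [[row[index]] for row in table if index < len(row)]


def to_tsv(rows):
    """Serialize rows as tab-separated text, the form spreadsheets accept on paste."""
    buf = io.StringIO()
    csv.writer(buf, delimiter="\t", lineterminator="\n").writerows(rows)
    return buf.getvalue()


# Hypothetical table already extracted from a page.
table = [["Name", "Qty"], ["Apples", "3"], ["Pears", "5"]]
print(to_tsv(table), end="")
print(to_tsv(select_column(table, 1)), end="")
```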

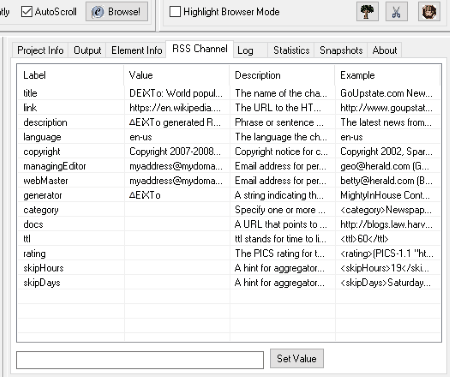

DEiXTo

DEiXTo is simple, feature-rich web data extraction software. It is also quite easy to use.

- Just enter the website URL and click the Browse! button.

- Various details are then displayed, such as the DOM tree, Auxiliary Record Instance, Record Instance – Working Pattern, and so on.

- You can also choose an extraction pattern and apply it to download specific data.

- You can then save your data as TXT.

- Some HTML tags can also be ignored while building the DOM tree.

- Different tabs can be used to view the output and modify its properties.
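Ignoring HTML tags while building the DOM tree means that tags such as `<b>` are left out and their text is attached to the enclosing element, which can simplify the tree that extraction patterns are matched against. The Python sketch below illustrates that idea with `html.parser`; it is a simplified stand-in, not DEiXTo's tree builder, and `DomTree`, `build_tree`, and `render` are hypothetical names.

```python
from html.parser import HTMLParser

VOID = {"br", "img", "meta", "link", "input", "hr"}  # elements with no closing tag


class DomTree(HTMLParser):
    """Builds a nested tree of {"tag", "children"} dicts; tags in `ignore` are
    skipped, so their text is attached to the enclosing element instead."""

    def __init__(self, ignore=()):
        super().__init__()
        self.ignore = set(ignore)
        self.root = {"tag": "#root", "children": []}
        self.stack = [self.root]

    def handle_starttag(self, tag, attrs):
        if tag in self.ignore:
            return
        node = {"tag": tag, "children": []}
        self.stack[-1]["children"].append(node)
        if tag not in VOID:
            self.stack.append(node)

    def handle_endtag(self, tag):
        if tag in self.ignore or tag in VOID:
            return
        # Pop back to the matching open element (tolerates some unclosed tags).
        for i in range(len(self.stack) - 1, 0, -1):
            if self.stack[i]["tag"] == tag:
                del self.stack[i:]
                break

    def handle_data(self, data):
        if data.strip():
            self.stack[-1]["children"].append(data.strip())


def build_tree(html, ignore=()):
    parser = DomTree(ignore)
    parser.feed(html)
    parser.close()
    return parser.root


def render(node, depth=0):
    """Return the tree as indented lines: tag names, and text as quoted strings."""
    lines = []
    for child in node["children"]:
        if isinstance(child, str):
            lines.append("  " * depth + repr(child))
        else:
            lines.append("  " * depth + child["tag"])
            lines.extend(render(child, depth + 1))
    return lines


html = "<div><p>Price: <b>$5</b></p></div>"  # hypothetical fragment
print("\n".join(render(build_tree(html))))
print("\n".join(render(build_tree(html, ignore=("b",)))))
```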

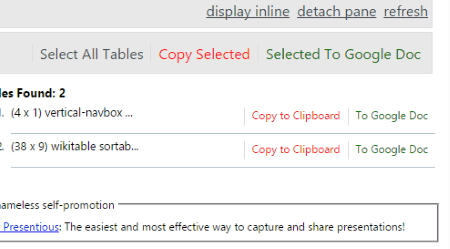

Table Capture

Table Capture is a simple Chrome extension that lets you copy table data directly from websites. To use it, simply follow these steps:

- Install the Chrome extension and browse to the website.

- If any table is found on the webpage, the extension icon turns red.

- Clicking the extension shows the number of tables available.

- You are also given the option to copy a table either to the clipboard or to Google Docs.

- You can also choose other options, such as display online and detach pane.

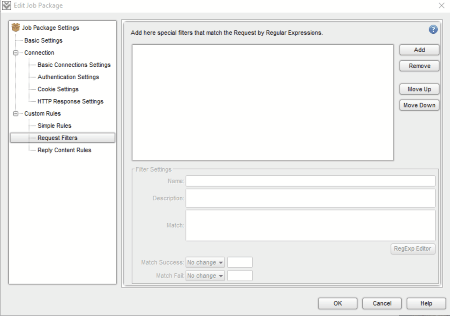

Darcy Ripper

Darcy Ripper is a simple Java application for scraping data from websites. To use it, you need knowledge of regular expressions.

- Click the Create new button and enter the details of the website.

- Under the Custom rules tab, add the regular expressions that you want to apply, under either the Request filters tab or the Reply content rules tab.

- The data is then scraped and can be saved as DJP.
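Request filters decide which URLs the ripper follows or downloads. A hedged Python sketch of regex-based include/exclude filtering is below; the function name, the include/exclude semantics, and the sample URLs are assumptions for illustration, not Darcy Ripper's actual rule format.

```python
import re


def filter_urls(urls, include=(), exclude=()):
    """Keep URLs that match at least one include pattern (if any are given)
    and none of the exclude patterns."""
    inc = [re.compile(p) for p in include]
    exc = [re.compile(p) for p in exclude]
    kept = []
    for url in urls:
        if inc and not any(r.search(url) for r in inc):
            continue
        if any(r.search(url) for r in exc):
            continue
        kept.append(url)
    return kept


# Hypothetical URLs discovered while crawling.
urls = [
    "https://example.com/docs/a.pdf",
    "https://example.com/img/logo.png",
    "https://example.com/docs/b.html",
]
print(filter_urls(urls, include=[r"/docs/"], exclude=[r"\.pdf$"]))
# ['https://example.com/docs/b.html']
```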

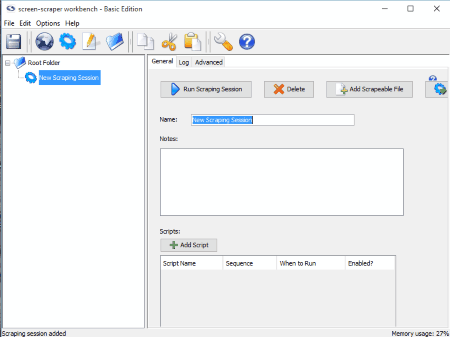

screen-scraper workbench

screen-scraper workbench is software that helps you scrape data from websites. To use it, simply follow these steps:

- Create a new scraping session.

- Add scraping properties, such as name, URL, sequence, and script.

- You can also add parameters and extractor patterns.

- An option is also provided to view the last request and last response.
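Extractor patterns in screen-scraper mark the data to capture with tokens written as `~@TOKEN@~` (an assumption worth checking against the screen-scraper documentation). One way to emulate such a pattern is to turn each token into a named regex group and escape everything else, as in this Python sketch; `compile_extractor`, `extract`, and the sample HTML are hypothetical, and token names must be unique within a pattern.

```python
import re

# Assumed token syntax: ~@NAME@~, where NAME is a valid identifier.
TOKEN = re.compile(r"~@([A-Za-z_]\w*)@~")


def compile_extractor(pattern):
    """Turn a token pattern like '<b>~@NAME@~</b>' into a regex with named groups."""
    parts, pos = [], 0
    for m in TOKEN.finditer(pattern):
        parts.append(re.escape(pattern[pos:m.start()]))  # literal text between tokens
        parts.append(f"(?P<{m.group(1)}>.*?)")          # lazy capture for the token
        pos = m.end()
    parts.append(re.escape(pattern[pos:]))
    return re.compile("".join(parts), re.DOTALL)


def extract(pattern, html):
    """Return one dict of token values per match of the pattern."""
    return [m.groupdict() for m in compile_extractor(pattern).finditer(html)]


# Hypothetical response body.
html = "<tr><td>Widget</td><td>$3</td></tr><tr><td>Gadget</td><td>$7</td></tr>"
print(extract("<td>~@NAME@~</td><td>~@PRICE@~</td>", html))
# [{'NAME': 'Widget', 'PRICE': '$3'}, {'NAME': 'Gadget', 'PRICE': '$7'}]
```

Lazy groups stop at the first occurrence of the literal text that follows a token, so a pattern should end with literal text rather than a token.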

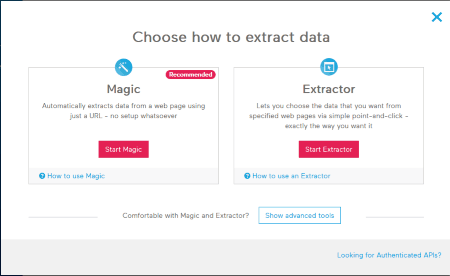

Import.io

Import.io is very powerful data scraping software. Once you download it, it asks you to browse to the page from which you want to download data. You then highlight the fields that you want to download, and based on that, it finds similar data on the page and lets you download all of it. It also supports pagination. Once you have taught Import.io what to do, you can simply rerun the script to get the latest data. It supports large volumes of data and is still completely free.
